Are Deep Learning Classification Results Obtained on CT Scans Fair and Interpretable?

Following the great success of deep learning methods in image and object classification, the biomedical image processing community has been flooded with their applications to automatic diagnosis. Unfortunately, most deep learning-based classification attempts in the literature focus solely on achieving extreme accuracy scores, without considering interpretability or patient-wise separation of training and test data. For example, most lung nodule classification papers using deep learning randomly shuffle the data and split it into training, validation, and test sets, so that some images from a person's Computed Tomography (CT) scan end up in the training set while other images of the same person end up in the validation or test sets. This can lead to misleading reported accuracy rates and the learning of irrelevant features, ultimately reducing the real-life usability of these models. When deep neural networks trained with the traditional, unfair data shuffling method are challenged with images from new patients, they perform poorly. In contrast, deep neural networks trained with strict patient-level separation maintain their accuracy rates even on images from new patients. Heat map visualizations of the activations of the networks trained with strict patient-level separation indicate a higher degree of focus on the relevant nodules. We argue that the research question posed in the title has a positive answer only if the deep neural networks are trained with images of patients that are strictly isolated from the validation and testing patient sets.

Methodology

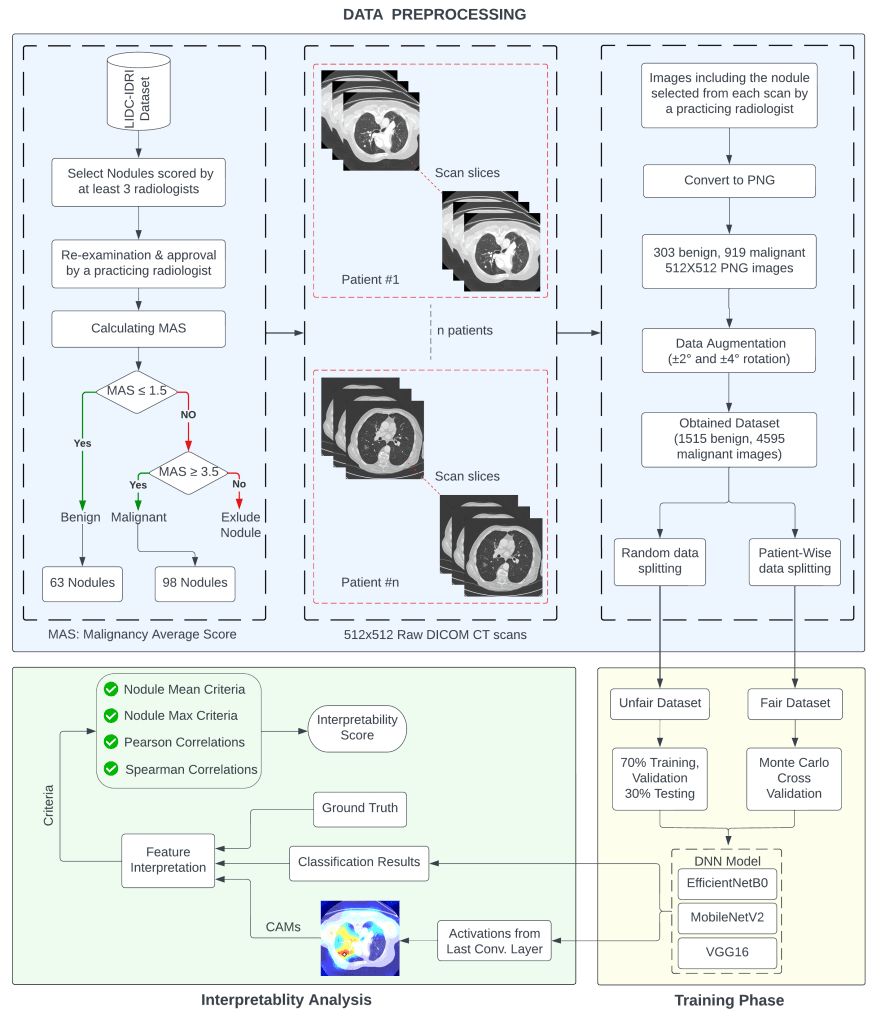

The overall methodology comprises several steps, starting from the construction of the dataset and ending at the interpretability measurements. The complete layout is shown in the accompanying flowchart.

Training The Models

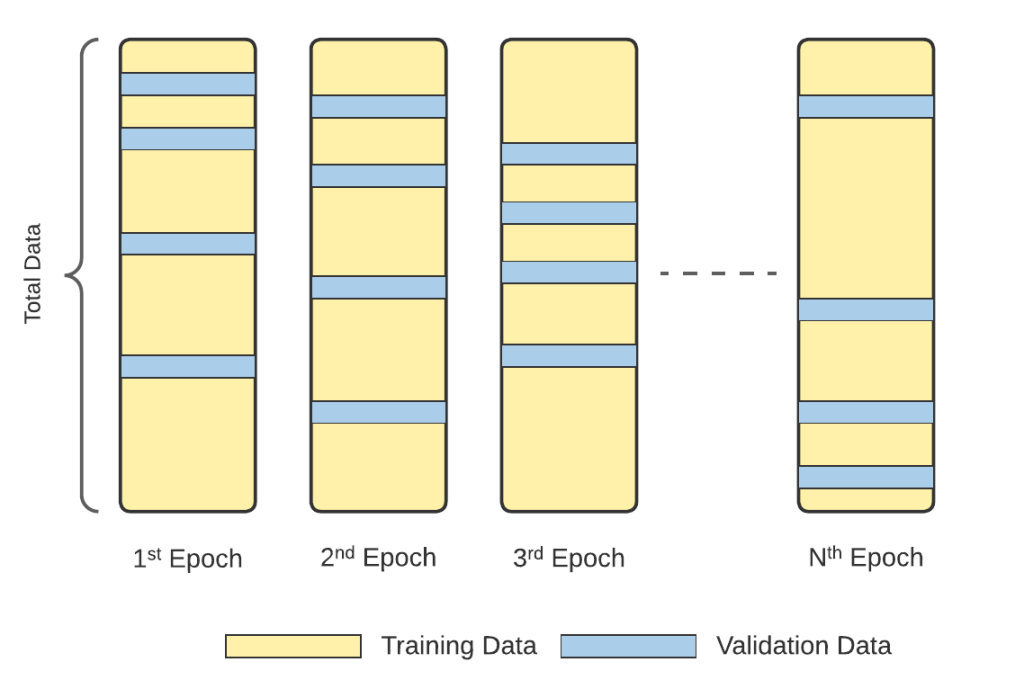

The improvement in the learning process and the validity of the reported accuracy results are analyzed. Figure 3-b clearly shows that the proposed training process improves gradually over time and that no inconsistent overfitting occurs. Furthermore, the resulting networks provide more reliable accuracy results, as will be explained in Sec. 3.

Monte Carlo CV

To avoid overfitting and achieve reliable accuracy results, the second experimental set, which we call the FAIR training procedure, used separate folders containing the CT scans of different patients. Monte Carlo Cross Validation (MCCV) was applied to shuffle the patients (each with all of their CT scan images) across the training, validation, and test sets.
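The patient-level shuffling described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function name `patient_level_mccv`, the 15% validation and test fractions, the number of repeats, and the toy patient IDs are all assumptions made for the example. The only property taken from the text is that patients, together with all of their images, are shuffled as a unit.

```python
import random


def patient_level_mccv(image_patient_ids, n_repeats=5,
                       val_frac=0.15, test_frac=0.15, seed=0):
    """Yield (train, val, test) lists of image indices, one triple per repeat.

    Patients, not images, are shuffled, so every image of a given patient
    lands in exactly one subset. Fractions are illustrative assumptions.
    """
    rng = random.Random(seed)
    patients = sorted(set(image_patient_ids))
    n_test = max(1, round(len(patients) * test_frac))
    n_val = max(1, round(len(patients) * val_frac))

    for _ in range(n_repeats):
        shuffled = patients[:]
        rng.shuffle(shuffled)  # a fresh random patient ordering per repeat
        test_patients = set(shuffled[:n_test])
        val_patients = set(shuffled[n_test:n_test + n_val])

        train, val, test = [], [], []
        for idx, pid in enumerate(image_patient_ids):
            if pid in test_patients:
                test.append(idx)
            elif pid in val_patients:
                val.append(idx)
            else:
                train.append(idx)
        yield train, val, test


# Toy data: 10 hypothetical patients with 4 CT slices each.
image_patient_ids = [f"patient_{p:02d}" for p in range(10) for _ in range(4)]
splits = list(patient_level_mccv(image_patient_ids, n_repeats=3))
```

An image-level shuffle would instead permute the 40 slice indices directly, which almost always places slices of the same patient on both sides of the split. That is the leakage the FAIR procedure is designed to rule out.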

Interpretability Results

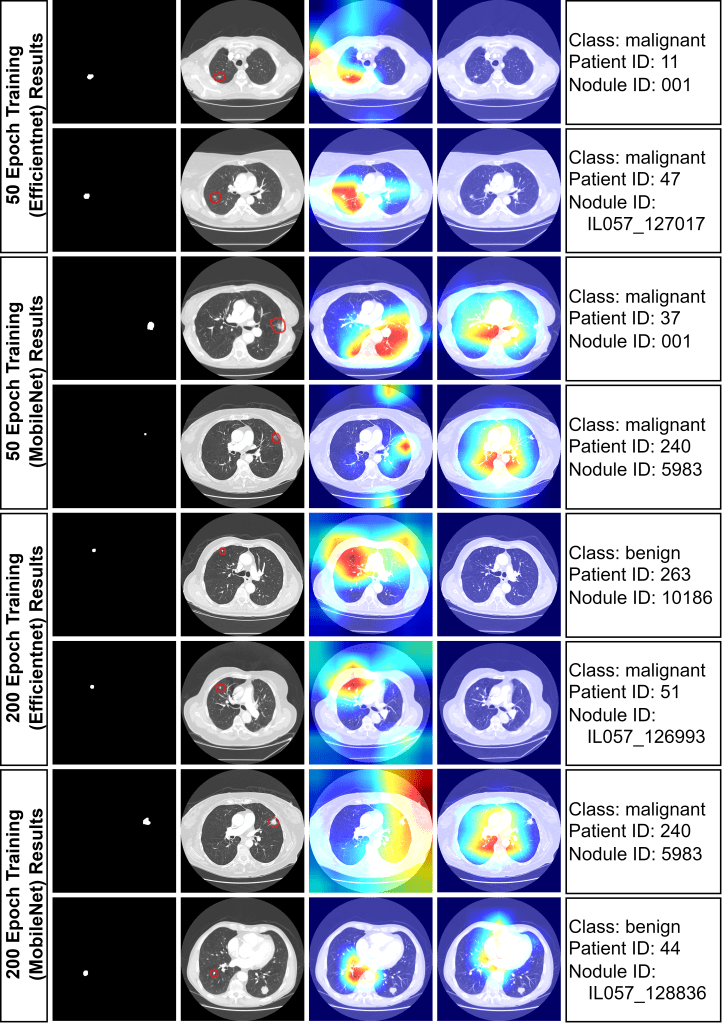

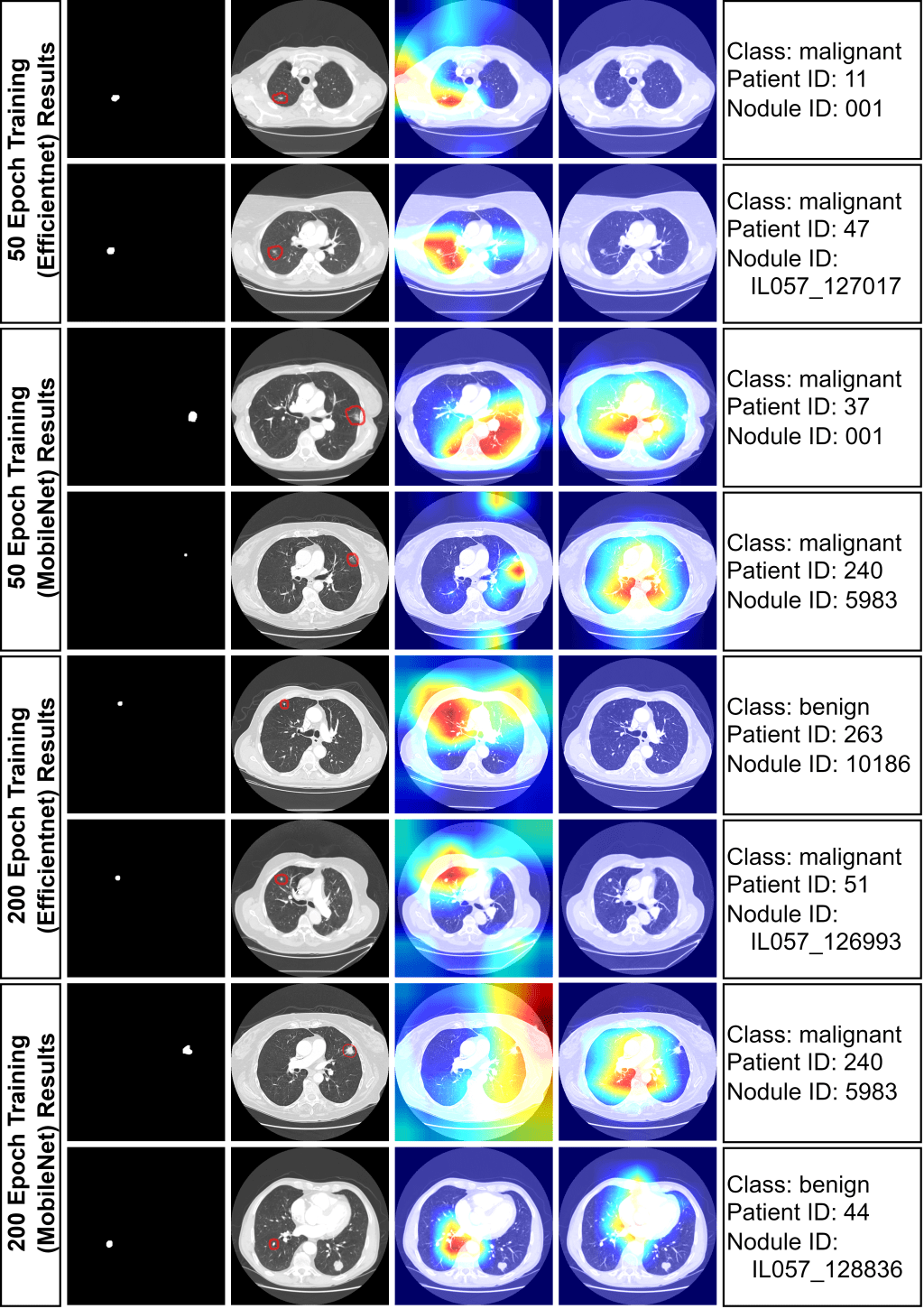

To demonstrate interpretability, eight images were randomly chosen for analysis with both the fair and unfair models. Heat maps generated from the last convolutional layers of MobileNetV2 and EfficientNet highlight the lung regions that influence the models' decisions. Red areas indicate stronger activations. The unfair model often focused on non-tumor areas when predicting malignancy, while the fair model concentrated on the tumor regions. This indicates the unfair model’s unreliability, likely stemming from overfitting due to improper train/test splitting.
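The text does not name the technique used to produce the heat maps. The sketch below assumes a Grad-CAM-style weighting of the last convolutional layer's feature maps, which is one common way to obtain such visualizations. The function names, the synthetic arrays, and the `fraction_inside_mask` score are hypothetical and introduced only for illustration; in practice the feature maps and gradients would be extracted from the trained network.

```python
import numpy as np


def gradcam_heatmap(feature_maps, gradients):
    """Grad-CAM-style heat map from last-conv-layer activations.

    feature_maps, gradients: arrays of shape (K, H, W), where gradients holds
    the derivative of the class score with respect to each activation.
    Returns an (H, W) map scaled to [0, 1].
    """
    weights = gradients.mean(axis=(1, 2))               # one weight per channel
    cam = np.tensordot(weights, feature_maps, axes=1)   # weighted channel sum
    cam = np.maximum(cam, 0.0)                          # keep positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def fraction_inside_mask(heatmap, nodule_mask):
    """Hypothetical score: share of heat map mass inside the nodule mask."""
    total = heatmap.sum()
    return float((heatmap * nodule_mask).sum() / total) if total > 0 else 0.0


# Synthetic example: one channel fires at a single location marked as nodule.
feature_maps = np.zeros((4, 7, 7))
feature_maps[0, 3, 3] = 1.0
gradients = np.zeros((4, 7, 7))
gradients[0] = 1.0

nodule_mask = np.zeros((7, 7))
nodule_mask[3, 3] = 1.0

heatmap = gradcam_heatmap(feature_maps, gradients)
focus = fraction_inside_mask(heatmap, nodule_mask)
```

Under these assumptions, a model that attends to the nodule would score close to 1 on such a measure, while one that attends to unrelated lung regions would score close to 0.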

https://link.springer.com/article/10.1007/s13246-024-01419-8: Are Deep Learning Classification Results Obtained on CT Scans Fair and Interpretable?
