Neural Network Representations for the Inter- and Intra-Class Common Vector Classifiers

The Common Vector Approach (CVA) is an established linear regression-based classifier that also admits an extension to inter-class discrimination, known as the Discriminative Common Vector Approach (DCVA). The characteristics of linear regression classifiers (LRCs) allow a schematic implementation similar to the neuron model of artificial neural networks (ANNs). In this work, we exploit this schematic similarity to derive an ANN representation for both CVA and DCVA. The new representation eliminates the need for projection matrices in its implementation and hence significantly reduces memory requirements and computational complexity. Furthermore, since the new representation is neural in style, it is expected to provide a solid and intriguing extension of CVA (and DCVA), because adaptation or activation processes can be incorporated into these already successful CVA-based classifiers.
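For contrast with the projection-free representation, here is a minimal NumPy sketch of the classical, projection-based CVA classifier. It is not the authors' code. The function names `cva_fit` and `cva_predict` are hypothetical. It assumes that each class's difference vectors are built from its first training vector and are linearly independent (fewer training vectors than dimensions). Each class stores an n × n projection matrix, which is the kind of object the neural representation is said to eliminate.

```python
import numpy as np


def cva_fit(classes):
    """Classical (projection-based) CVA training.

    classes: list of arrays of shape (m, n), one training vector per row.
    Assumes the m - 1 difference vectors of each class are linearly
    independent. Returns (P, a_com) per class: the n x n projection
    matrix onto the difference subspace and the class common vector.
    """
    models = []
    for A in classes:
        # Difference vectors b_j = a_{j+1} - a_1, stacked as columns.
        B = (A[1:] - A[0]).T                  # shape (n, m-1)
        # Orthonormal basis of the difference subspace.
        Z, _ = np.linalg.qr(B)                # shape (n, m-1)
        P = Z @ Z.T                           # n x n projection matrix
        a_com = A[0] - P @ A[0]               # common vector of the class
        models.append((P, a_com))
    return models


def cva_predict(models, a_x):
    """Assign a_x to the class whose common vector is closest to the
    remaining vector a_x - P a_x."""
    scores = [np.linalg.norm((a_x - P @ a_x) - a_com) for P, a_com in models]
    return int(np.argmin(scores))


# Usage: two synthetic classes of 5 vectors in 50 dimensions.
rng = np.random.default_rng(0)
classes = [rng.normal(loc=c, size=(5, 50)) for c in (0.0, 3.0)]
models = cva_fit(classes)
print(cva_predict(models, classes[1][2]))  # → 1
```

A training vector of a class differs from that class's first vector by an element of the difference subspace, so its own-class score is zero up to rounding.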

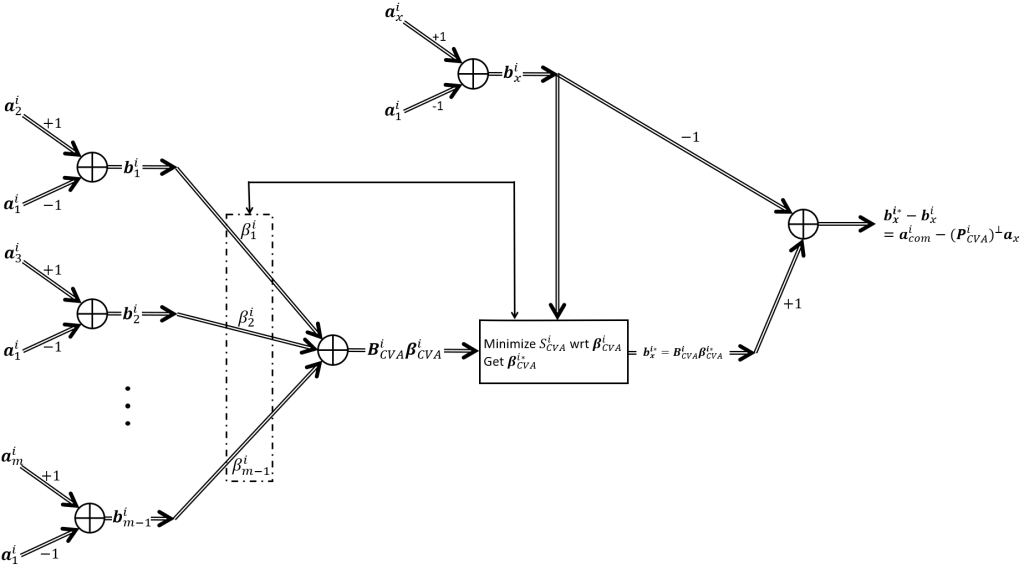

The neural network equivalent of CVA, in which all neurons are ADALINEs (neurons with linear activation functions), is shown in Fig. 1. The output norms of the classes are compared to classify the input vector $a_x$. For the training vectors of each class, the first layer of the network computes difference vectors of the form

$$
b_j^i = a_{j+1}^i - a_1^i, \qquad i = 1, 2, \ldots, C, \quad j = 1, 2, \ldots, m-1.
$$

The outputs of the first layer are therefore the difference vectors of each class. Each difference vector is then multiplied by a scalar $\beta_j^i$, with $i = 1, 2, \ldots, C$ and $j = 1, 2, \ldots, m-1$, to obtain the matrix $B_i^{\mathrm{CVA}}$ for $i = 1, 2, \ldots, C$.
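The following NumPy sketch shows how the layer structure described above can be computed without an n × n projection matrix. It rests on several assumptions, and all names in it are hypothetical:

- Difference vectors are taken as $b_j^i = a_{j+1}^i - a_1^i$.
- The scalars $\beta_j^i$ are chosen as the least-squares coefficients of $a_x - a_1^i$ on the difference vectors. This is a linear-regression reading of CVA, not necessarily how the paper sets them.
- The class with the smallest output norm wins.

Under these assumptions the output norm equals the classical CVA distance $\lVert a_{x,\mathrm{rem}}^i - a_{\mathrm{com}}^i \rVert$. Both are the norm of the component of $a_x - a_1^i$ orthogonal to the difference subspace.

```python
import numpy as np


def difference_layer(A):
    """First layer: m-1 linear (ADALINE-style) units for one class.

    A has shape (m, n), one training vector per row. Unit j has weight +1
    on a_{j+1}, -1 on a_1 and identity activation, so its output is the
    difference vector b_j = a_{j+1} - a_1.
    """
    m = A.shape[0]
    # Fixed weight matrix of shape (m-1, m); row j is e_{j+1} - e_1.
    W = np.hstack([-np.ones((m - 1, 1)), np.eye(m - 1)])
    return W @ A                      # shape (m-1, n), rows are b_j


def fit(classes):
    """Store, per class, a_1, the difference vectors, and an (m-1) x n map
    that yields the coefficients beta_j (assumed: least squares).
    No n x n projection matrix is formed."""
    models = []
    for A in classes:
        D = difference_layer(A)
        G = np.linalg.pinv(D.T)       # least-squares coefficient map, (m-1, n)
        models.append((A[0], D, G))
    return models


def classify(models, a_x):
    """Scale each b_j by beta_j, subtract, and pick the smallest output norm."""
    scores = []
    for a_1, D, G in models:
        target = a_x - a_1
        beta = G @ target             # one scalar beta_j per difference vector
        out = target - D.T @ beta     # target minus sum_j beta_j * b_j
        scores.append(np.linalg.norm(out))
    return int(np.argmin(scores))


# Usage: two synthetic classes of 5 vectors in 50 dimensions.
rng = np.random.default_rng(0)
classes = [rng.normal(loc=c, size=(5, 50)) for c in (0.0, 3.0)]
models = fit(classes)
print(classify(models, classes[1][2]))  # → 1
```

Per class, this sketch keeps only matrices of size (m−1) × n instead of n × n. This illustrates why dropping projection matrices can save memory when there are far fewer training vectors than dimensions.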

This paper is published in the journal Digital Signal Processing. For details about this work, the full article is available here: https://www.sciencedirect.com/science/article/pii/S1051200423003007